Notes on Policy Gradient Methods

Some notes on Chapter 13 - Policy Gradient Methods from Sutton & Barto’s book Reinforcement Learning: An Introduction in 2017.

1. The Framework of the RL Book

The book Reinforcement Learning: An Introduction provides a clear and simple account of the key ideas and algorithms of reinforcement learning. The discussion ranges from the history of the field’s intellectual foundations to some of the most recent developments and applications. A rough roadmap is shown as follow. (Not exactly the same as the contents.)

- Tabular Solution Methods

- A Single State Problem Example: Multi-armed Bandits

- General Problem Formulation: Finite Markov Decision Processes

- Solving Finite MDP Using:

- Dynamic Programming

- Monte Carlo Methods

- Temporal-Difference Learning

- Combining:

- MC Methods & TD Learning

- DP & TD Learning

- Approximate Solution Methods

- On-policy:

- Value-based Methods

- Policy-based Methods

- Off-policy Methods

- Eligibility Traces

- Policy Gradient Methods (we are here)

- On-policy:

2. Notes on Policy Gradient Methods

2.1 What’s Policy Gradient?

Almost all the methods in the book have been action-value methods until Chapter 13. In this chapter, a parameterized policy is learned, with or without learning a value function.

Policy Gradient is:

- Methods that learn a parameterized policy that can select actions without consulting a value function.

- The parameters are learned based on the gradient of some scalar performance measure J(θ) with respect to the policy parameter.

- The general scheme of an update in policy gradient methods looks like this. It seeks to maximize performance.

There might or might not be a learned value function. If both the approximations of policy and value function are learned, then it’s called an actor-critic method. (‘critic’ is usually a state-value function)

is a stochastic estimate whose expectation approximates the gradient of the performance measure, with respect to its argument . This is an important rule that all the variants of policy gradient methods should follow:

Why don’t we use the gradient of the performance directly?

A proper selection of performance function is the state-value function . Let’s define the performance measure as the value of the start state of the episode, . The performance is affected by the action selections as well as the distribution of states, both off which are functions of the policy parameter. It’s not hard to compute the effect of the policy parameter on the action and reward given a state. But the state distribution is actually a function of the environment and is typically unknown.

Note that the following discussion is for the episodic case with no discounting , without losing meaningful generality. The continuous case has almost the same property. The only difference is that if we accumulate rewards in the continuous case, we will finally get . It’s meaningless to optimize an infinite measure.

2.1 Monte Carlo Policy Gradient and the Policy Gradient Theorem

Monte Carlo Policy Gradient, also called Vanilla Policy Gradient or REINFORCE, has a simple form given as:

is a learned approximation to . It’s so called ‘critic’ and is parameterized by .

That is, we let .

Recall the standard for a function to become a performance measure. Surely that this equation should be satisfied:

or

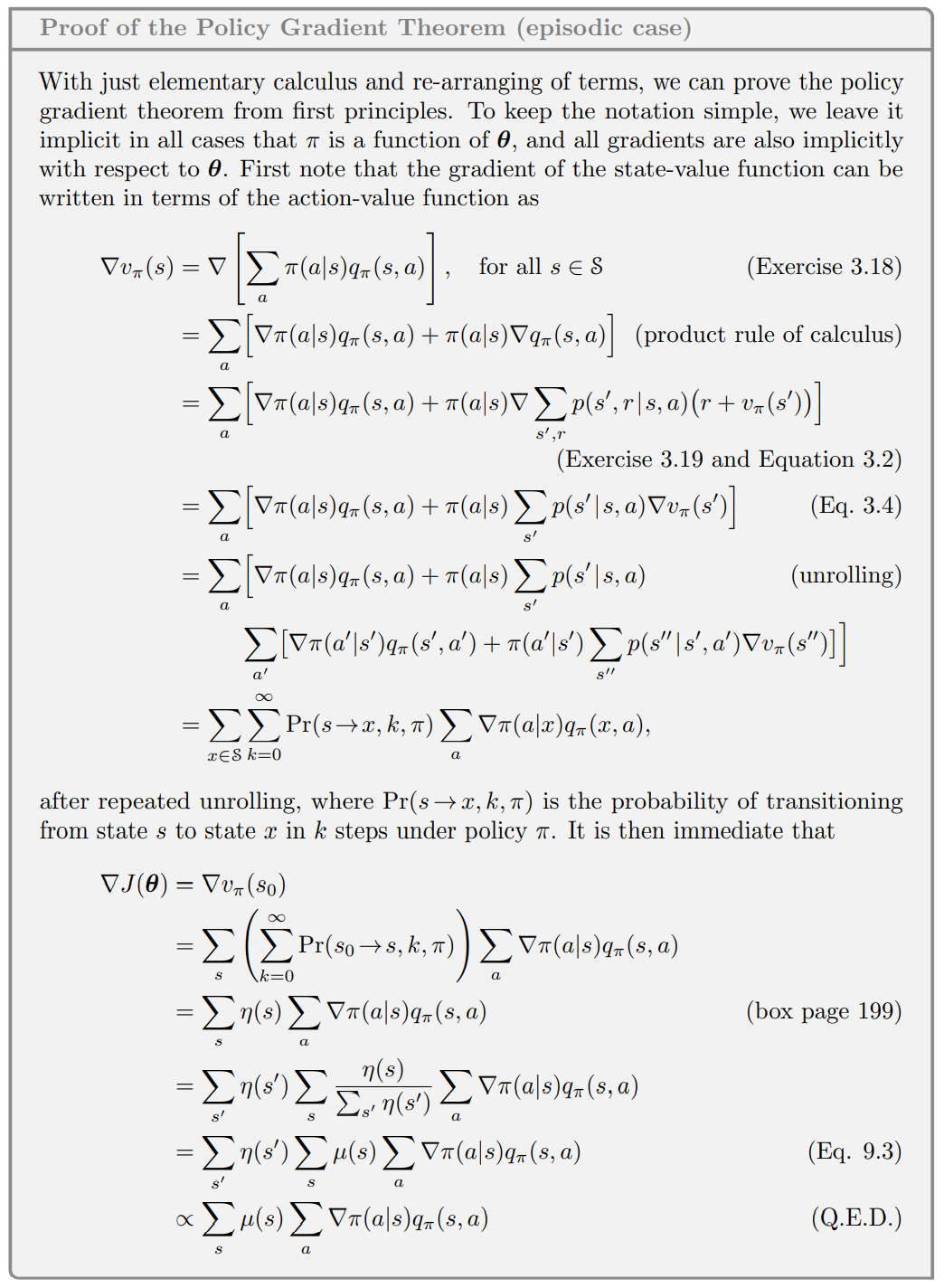

The proof of this is what the Policy Gradient Theorem is all about. The derivation is a lot of fun.

2.1.1 The Policy Gradient Theorem (episodic case)

Figure 1

Under construction

An optional caption for a math block

2.1.2 Monte Carlo Policy Gradient Algorithm

Under construction